Before Fine-Tuning: Deep Dive into Transformers

Published on Sunday, Jul 6, 2025

1 min read

Recently, I started exploring fine-tuning models but realized I needed a solid understanding of transformers to know what exactly I am fine-tuning. This post captures my structured notes, learnings, and explanations for transformers, gathered from reading and videos, to build a strong foundation.

🚩 Why Transformers Matter

Transformers are the backbone of modern NLP, forming the architecture behind models like BERT, GPT, LLaMA, and T5. Introduced in Attention Is All You Need, transformers removed sequential bottlenecks from RNNs/LSTMs by using attention mechanisms, enabling parallelization, scalability, and efficiency.

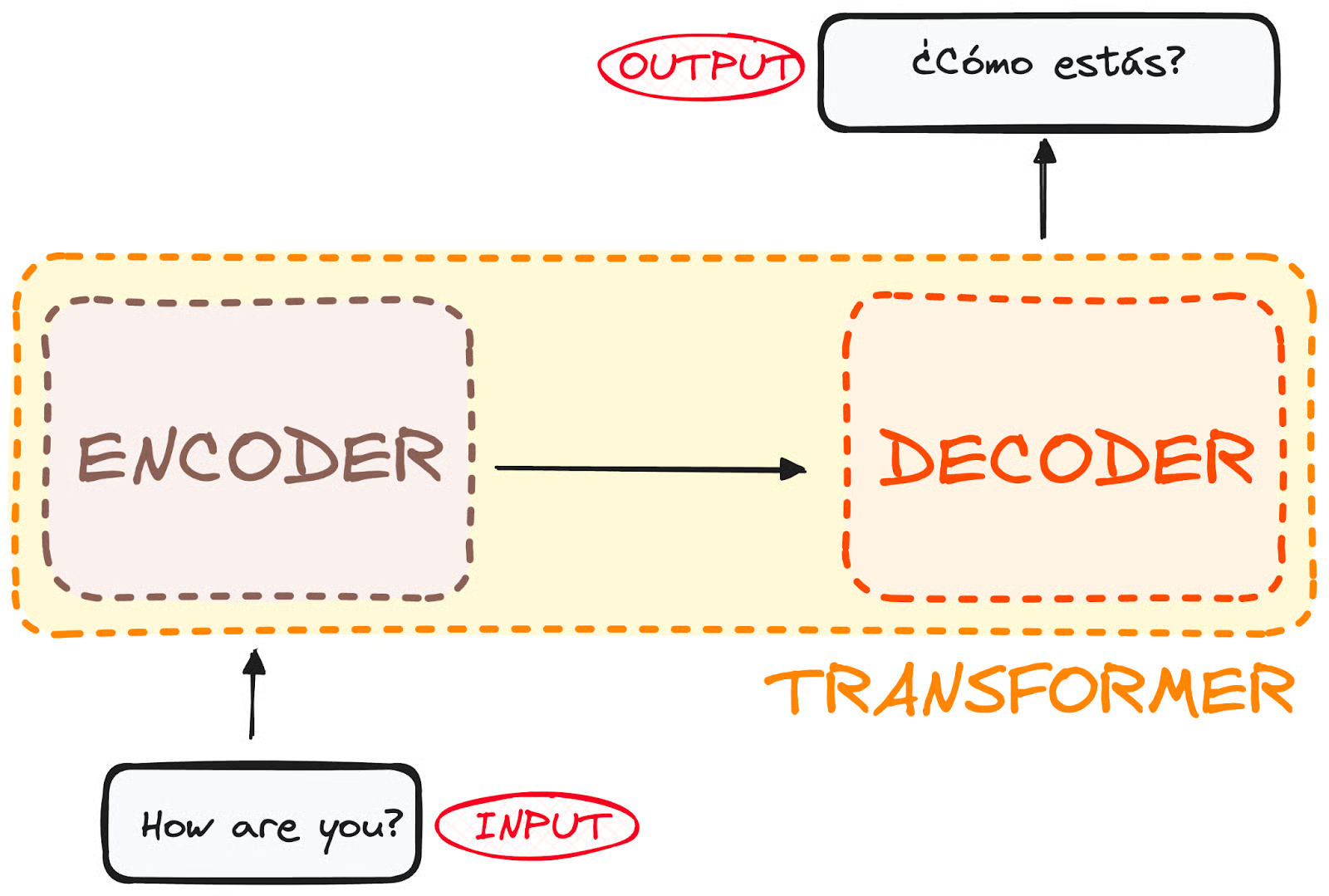

🛠️ The Architecture: Encoder-Decoder Structure

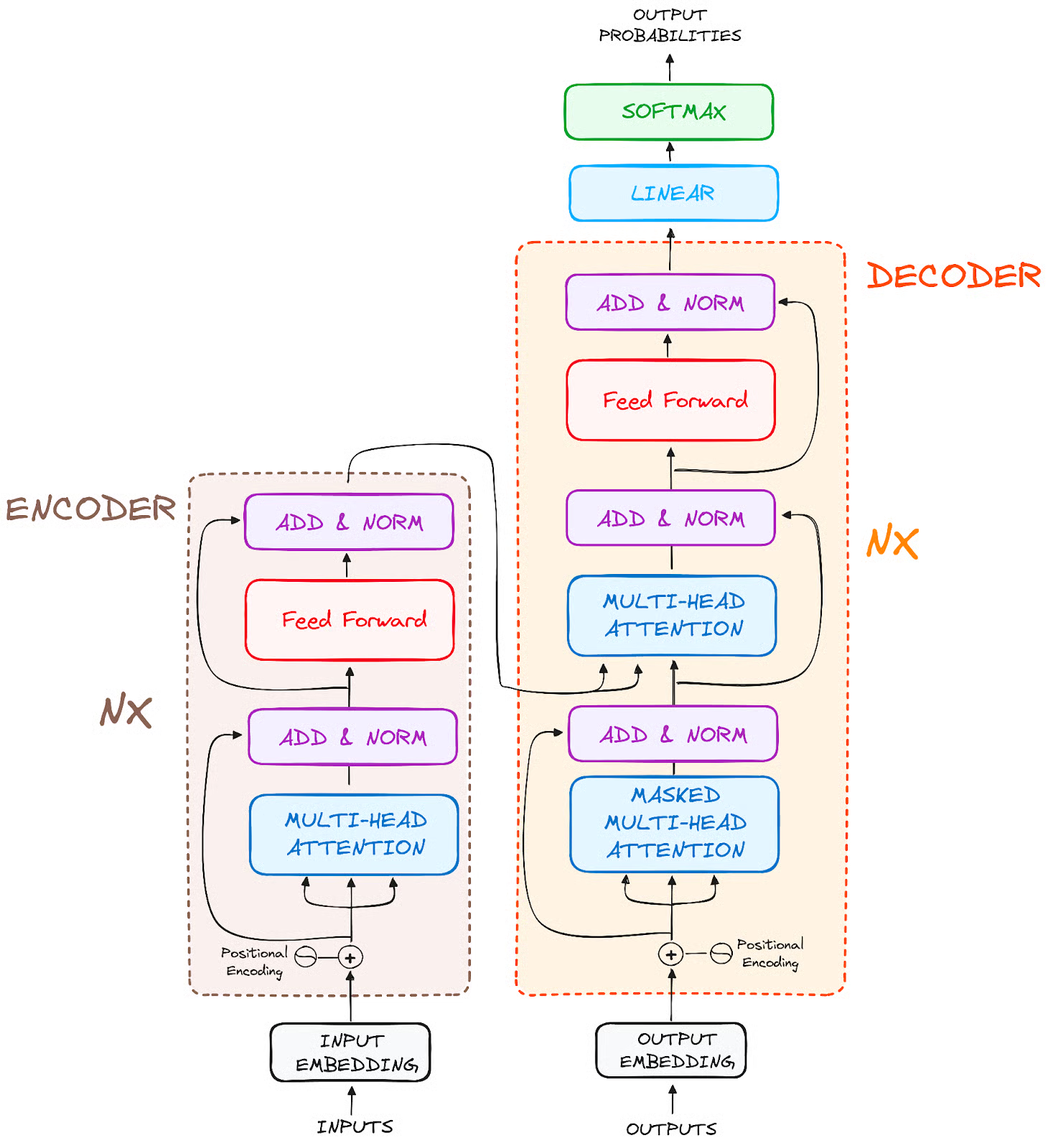

Transformers are composed of stacked encoder and decoder layers:

- Encoder layers: Encode the input sequence into context-rich representations.

- Decoder layers: Generate output sequences (useful in tasks like translation).

Each encoder layer contains:

- Multi-head self-attention

- Feed Forward Neural Network (FFNN)

- Layer Normalization + Residual Connections

Each decoder layer includes:

- Masked multi-head self-attention

- Encoder-decoder (cross) attention

- FFNN + Layer Normalization + Residuals

This structure enables deep representation learning while retaining interpretability.

✨ Why Transformers > RNNs/LSTMs

From my notes and DataCamp:

-

RNNs/LSTMs process sequentially, making them slow to train and hard to parallelize.

-

They struggle with long-term dependencies due to vanishing/exploding gradients despite LSTM’s gating.

-

Transformers use self-attention, allowing:

✅ Better long-range dependency handling

✅ Parallel training

✅ Scalability to large datasets

🧩 The Core: Self-Attention and Q, K, V

Self-attention enables each token to attend to every other token and compute weighted representations.

Key steps:

-

For each token:

- Compute:

- Query (Q)

- Key (K)

- Value (V)

- These are linear projections of the embeddings.

- Compute:

-

Compute attention scores using dot product:

-

Scale scores to stabilize gradients:

where = dimension of key vectors.

-

Apply softmax for normalized attention weights:

-

Multiply with value vectors:

This enables contextual representations, where tokens dynamically decide what to focus on.

🔎 Multi-Head Attention: Detective Analogy

Single attention head limitations: May capture only one type of relationship.

Multi-head attention:

- Projects Q, K, V into multiple subspaces.

- Performs attention in parallel across heads.

- Concatenates and projects outputs.

Analogy from the Medium blog:

Think of multiple detectives solving a case:

- One checks fingerprints.

- Another investigates the timeline.

- Another interviews witnesses.

Each focuses on different clues, combining them for a holistic understanding, similar to how multi-head attention captures various dependencies simultaneously.

⚡ Positional Encoding: Bringing Order to Tokens

Transformers lack recurrence and process tokens in parallel, requiring positional encodings to inject order information.

They:

✅ Help differentiate “Nitish loves AI.” vs. “AI loves Nitish.”

✅ Allow the model to learn position-based relationships.

Sinusoidal positional encoding:

where:

- : position in sequence

- : dimension index

- : embedding dimension

These are added to embeddings before feeding into attention layers.

🪐 Feed Forward Networks and Layer Normalization

After multi-head attention, each layer includes:

-

Position-wise FFNN:

-

Layer normalization and residual connections to stabilize and speed up training.

🪄 Putting It All Together

A single transformer encoder layer:

- Input + positional encodings

- Multi-head self-attention + residual + layer norm

- FFNN + residual + layer norm

Stacking multiple layers allows deep, hierarchical understanding of input sequences.

💡 Practical Insights (From My Notes and Readings)

✅ Transformers are parallelizable, allowing faster GPU training.

✅ They handle long dependencies efficiently.

✅ Core building blocks:

- Self-attention

- Multi-head attention

- Positional encodings

- FFNN layers

They are the foundation of models like GPT, BERT, LLaMA, and T5, which are pre-trained on large corpora and fine-tuned for downstream tasks.

📚 Resources I Used

These resources helped me clarify transformers intuitively:

If you found this helpful, let’s connect! I’m learning in public and would love to hear how you understood transformers or which part you found tricky while starting.

Happy Learning! 🚀